
THIS IS AN ARCHIVE OF THE CMMIFAQ c.2014. IT IS NOT MAINTAINED AND MOST LINKS HAVE BEEN DISABLED.


Welcome to our brutally honest, totally hip CMMIFAQ.

We're probably going to make as many enemies as friends with this FAQ, but hey, we expect it to be worth it. :-)

We also did a bit of research and found it pretty hard (if not impossible) to find this kind of information anywhere else on the web. So anyone who has a problem with our posting this information is probably the kind of person who wants you to pay to get it out of them before you have enough information to even make a good decision. But we digress... We do that a lot.

This site was designed to provide help with CMMI for people who are researching, trying to get to "the truth" about CMMI, or just looking for answers to basic, frequently asked questions about CMMI and the process of having an appraisal for getting a level rating (or CMMI certification as some people (inaccurately) prefer to say).

The information on this site has also been demonstrated to provide answers and new insights to people who are already (or thought they were) very familiar with CMMI and the appraisal. Feedback has indicated that there is more than a fair amount of incomplete and actually incorrect information being put forth by supposed experts in CMMI.

Your feedback is therefore very important to us. If you have any suggestions for other questions, or especially corrections, please don't hesitate to send them to us.

This is a work-in-progress. Not all questions have been answered yet -- simply a matter of time to write them, not that we don't know the answers -- but we didn't want to keep you waiting, so we're starting with what we have.

For your own self-study, and for additional information, the source material for many of the answers on CMMI comes from the CMMI Institute. They're not hiding anything; it's all there.

We've broken up the FAQs into the following sections (there will be much cross-over, as can be expected):

- WHAT is CMMI?

- Is CMMI for us?

- Is CMMI dead?

- How many processes are there in CMMI?

- How are the processes organized?

- What is each process made up of?

- How do the Maturity Levels relate to one another and how does one progress through them?

- What's the difference between Staged and Continuous?

- What's the difference between Maturity Level and Capability Level?

- What are the Generic Goals?

a.k.a. What are the differences among the Capability Levels?

a.k.a. What do they mean when they say process institutionalization?

- What's High Maturity About?

a.k.a. What's the fuss about High Maturity Lead Appraisers?

- What's a Constellation?

- How many different ways are there to implement CMMI?

- Do we have to do everything in the book? Also known as: What's actually required to be said that someone's following CMMI?

- Why does it cost so much?

- Why does it take so long?

- Why would anyone want to do CMMI if they didn't have to do it to get business?

- Isn't CMMI just about software development?

- What's the difference between CMMI v1.1 and v1.2?

- What's the difference between CMMI v1.2 and v1.3?

- What's the key limitation for approaching CMMI?

- What's the key effort required in CMMI implementation?

- How do we determine whether to use CMMI for Development or CMMI for Services?

a.k.a. What's the fuss about the informative materials in the High Maturity process areas?

- How do we get "certified"?

- How long does it take?

- How much does it cost?

- What's involved (in getting a rating)?

- How does the appraisal work?

- What's a SCAMPI?

- Who can do the appraisal?

- Can we have our own people on the appraisal?

- Can we have observers at the appraisal?

- What sort of evidence is required by the appraisal?

- How much of our company can we get appraised?

- How many projects (basic units) need to be appraised?

- Can we have more than one appraisal and inch our way towards a rating?

- If we go for a "level" now, do we have to go through it again to get to the next "level"?

- How long does the "certification" last?

- What is the difference between SCAMPI Class A, B and C appraisals?

- How do we pick a consultant or lead appraiser?

- Where can we see a list of organizations that have been appraised?

- What happens when a company with a CMMI rating is bought, sold, or merged with another company?

- What's the official record of the appraisal results?

- Can we go directly to (Maturity or Capability) Level 5?

- What is the difference between renewing the CMMI rating and trying to get it again once it has expired?

- Q: Can my organization go directly to a formal SCAMPI A without any SCAMPI B or SCAMPI C? Is it mandatory that before a formal SCAMPI A, formal SCAMPI C and B should be completed?

CMMI, Agile, Kanban, Lean, LifeCycles and other Process Concepts FAQs

- What if our development life cycle doesn't match-up with CMMI's?

- Doesn't the CMMI only work if you're following the "Waterfall" model?

- How does CMMI compare with ISO/TL 9000 and ITIL?

- Aren't CMMI and Agile / Kanban / Lean methods at opposite ends of the spectrum?

- How are CMMI and SOX (SarBox / Sarbanes-Oxley) Related?

SEI / CMMI Institute & Transition Out of SEI FAQs

- Why is CMMI Being Taken Out of the SEI?

- Who Will Operate the CMMI?

- What Will Happen to CMMI? Will CMMI Continue to be Supported?

- Will CMMI Change? What's the Future of CMMI?

- Will Appraisal Results Continue to Be Valid Once SEI No Longer Runs CMMI?

- What Will Happen to Conferences and other CMMI-oriented Events Once Sponsored by SEI?

- Will We Still Be Able to Work with Our Current "SEI Partner"?

- Isn't this just a cash cow for the SEI?

- What makes SEI the authority on what are "best practices" in software?

- Do the Lead Appraisers work for the SEI?

- What's a "Transition Partner"?

- What's the "Partner Network"?

- How do we report concerns about ethics, conflicts of interest, and/or compliance?

- Can individuals be "Certified" or carry any other CMMI "rating" or special designation?

Training FAQs

Specific Model Content FAQs

- What is the exact difference between GP 2.8 and GP 2.9?

- Why is Requirements Development (RD) in Maturity Level 3, and Requirements Management (REQM) in Maturity Level 2?

- GP 2.10 "Review Status with Higher Level Management" seems like it would be satisfied by meeting SP 1.6 and 1.7 in PMC but that doesn't seem to meet the institutionalization. Would the OPF and OPD SPs also need to be met to meet GP 2.10?

WHAT is CMMI?

A: CMMI stands for "Capability Maturity Model Integration". It's the integration of several other CMMs (Capability Maturity Models). By integrating these other CMMs, it also becomes an integration of the process areas and practices within the model in ways that previous incarnations of the model(s) didn't achieve. The CMMI is a framework for business process improvement. In other words, it is a model for building process improvement systems. In the same way that models are used to guide thinking and analysis on how to build other things (algorithms, buildings, molecules), CMMI is used to build process improvement systems.

It is NOT an engineering development standard or a development life cycle. Please take a moment to re-read and reflect on that before continuing.

There are currently three "flavors" of CMMI called constellations. The most famous one is the CMMI for Development -- i.e., "DEV". It has been around (in one version or another) for roughly 10 years and has been the subject of much energy for over 20 years when including its CMM ancestors.

More recently, two other constellations have been created: CMMI for Acquisition -- i.e., "ACQ", and CMMI for Services -- i.e., "SVC". All constellations share many things, but fundamentally, they are all nothing more than frameworks for assembling process improvement systems. Each constellation has content that targets improvements in particular areas, tuned to organizations whose primary work effort either:

- Develops products and complex services, and/or

- Acquires goods and services from others, and/or

- Provides/delivers services.

NONE of the constellations actually contain processes themselves. None of them alone can be used to actually develop products, acquire goods or fulfill services. The assumption with all CMMIs is that the organization has its own standards, processes and procedures by which they actually get things done. The content of CMMIs is there to improve upon the performance of those standards, processes and procedures -- not to define them.

Having said that, it should be noted that there will (hopefully) be overlaps between what any given organization already does and content of CMMIs. This overlap should not be misinterpreted as a sign that CMMI content *is*, in fact, process content. It can't be over-emphasized: CMMIs, while chock-full-o' examples and explanations, do not contain "how to" anything other than building improvement systems. The overlap is easy to explain: activities that help improve a process can also be activities to effectively perform a process, and not every organization performs even the basic activities necessary to perform the process area well. So, what seems trivial and commonplace to one organization is, to another, salvation from despair.

Another way to look at CMMIs is that they focus on the business processes of developing engineered solutions (DEV), acquiring goods and services (ACQ) and delivering services (SVC). To date, CMMI has been most widely applied in software and systems engineering organizations. Now, with the expansion of the constellations, where it is applied is a significantly distinct matter from being anything even remotely akin to a standard or certification mechanism for the engineering, methods, technologies, or accreditation necessary to build stuff, buy stuff or do stuff. If an organization chose to do so, CMMI could be applied in the construction or even media production industries. (Exactly how would be an *entirely* different discussion!)

Before we get too off-track... CMMI is meant to help organizations improve their performance of and capability to consistently and predictably deliver the products, services, and sourced goods their customers want, when they want them and at a price they're willing to pay. From a purely inwardly-facing perspective, CMMI helps companies improve operational performance by lowering the cost of production, delivery, and sourcing.

Without some insight into and control over their internal business processes, how else can a company know how well they're doing before it's too late to do anything about it? And if/when they wait until the end of a project or work package to see how close/far they were to their promises/expectations, without some idea of what their processes are and how they work, how else could a company ever make whatever changes or improvements they'd want/need to make in order to do better next time?

CMMI provides the models from which to pursue these sorts of insights and activities for improvement. It's a place to start, not a final destination. CMMI can't tell an organization what is or isn't important to them. CMMI, however, can provide a path for an organization to achieve its performance goals.

Furthermore, CMMI is just a model, it's not reality. Like any other model, CMMI reflects one version of reality, and like most models, it's rather idealistic and unrealistic -- at least in some ways. When understood as *just* a model, people implementing CMMI have a much higher chance of implementing something of lasting value. As a model, what CMMI lacks is context. Specifically, the context of the organization in which it will be implemented for process improvement. Together with the organization's context, CMMI can be applied to create a process improvement solution appropriate to the context of each unique organization.

Putting it all together: CMMI is a model for building process improvement systems from which (astute) organizations will abstract and create process improvement solutions that fit their unique environment to help them improve their operational performance.

At the risk of seeming self-serving, the following addresses the question of what CMMI is:

Keys to Enabling CMMI.

Is CMMI for us?

A: We should start the answer to this question with a quick sentence about what CMMI itself *is*.

CMMI is about improving performance through improving operational processes. In particular, it's improving processes associated with managing how organizations develop or acquire solution-based wares and define and deliver their services. So we should ask you a question before we answer yours: Do you feel that you ought to be looking at improving your processes? What business performance improvements would you like to see from your operations?

SO, is CMMI right for you? Obviously this depends on what you're trying to accomplish. Sometimes it's best to "divide and conquer". So we'll divide the world into two groups: those who develop wares and provide services for US Federal agencies (or their prime contractors) and those who don't.

Those of you in the former group will probably come across CMMI in the form of a pre-qualifier in some RFP. As such, you're probably looking at the CMMI as a necessary evil regardless of whether or not you feel your processes need to be addressed in any way. If you're in this group, there aren't many loop-holes.

One strong case for why your company might not need to mess with CMMI would be if you are selling a product of your own specification. Something that might be called "shrink-wrapped" or even COTS (Commercial Off-The-Shelf). While looking at CMMI for process improvement wouldn't be a bad idea, the point is that unless you are developing wares from scratch to a government (or a Prime's) specification, you ought to be able to elude having someone else require or expect you to pursue CMMI practices when you otherwise might not do so.

A couple of exceptions to this "rule of thumb" would be (a) if you are entering into the world of custom wares for the Feds, even though you currently aren't in it, and/or (b) if your product might need modifications or out-of-spec maintenance for it to be bought/used by the government. Governments have an all-too-regular habit of buying a product "as is" functionally, and then realizing that what they need kinda only looks like the original product but is really different. Knowing this, many agencies and prime contractors are using the CMMI's appraisal method (called "SCAMPI") as part of their due diligence before wedding themselves to a product or vendor.

If you're in the latter group, (remember... those who don't sell to the Feds or their Primes) then the question is really this, "what's not working for you with your current way of running your operation?" You'll need to get crystal clear about that. Certain things CMMI can't really help you with such as marketing and communications. OK, it could, but if managing your customers and marketing are your biggest challenges, you've got other fish to fry and frying them with CMMI is a really long way around to get them into the pan. Don't get us wrong, there are aspects of CMMI that can be applied to anything related to *how* you do business. But, if you are worrying about where the next meal is coming from, you might be hungry for a while before the ROI from CMMI will bring home the bacon. It usually takes a number of months.

Having said that... If you're finding that

- customer acquisition, satisfaction, or retention, and/or

- project success, profitability, predictability, or timeliness, and/or

- employee acquisition, satisfaction, or retention, and/or

- service level accuracy, predictability, cycle or lead time

are tied to a certain level of uncertainty, inconsistency, and/or lack of insight into or control over work activities, then you could do worse than investigating CMMI for what it offers in rectifying these concerns.
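For readers who think better in code, the reasoning in this answer can be compressed into a small sketch. Everything named here (`Situation`, `cmmi_outlook`, the field names and the three outcome labels) is our own hypothetical shorthand for the paragraphs above, not anything defined by CMMI, and it flattens a judgment call into booleans.

```python
from dataclasses import dataclass

# Outcome labels are this sketch's own shorthand, not CMMI terminology.
EXPECT_IT = "expect CMMI to show up as a requirement or expectation"
INVESTIGATE = "worth investigating CMMI for improvement"
OTHER_FISH = "other priorities probably come first"


@dataclass
class Situation:
    """Hypothetical yes/no summary of an organization's position."""
    sells_to_feds_or_primes: bool = False
    product_is_own_specification: bool = False      # "shrink-wrapped" / COTS
    moving_into_custom_federal_work: bool = False   # exception (a)
    product_may_need_modifications: bool = False    # exception (b)
    pain_from_uncertainty: bool = False             # the bulleted symptoms


def cmmi_outlook(s: Situation) -> str:
    """Rough reading of the answer above; an assumption, not a rule."""
    custom_work_now = (s.sells_to_feds_or_primes
                       and not s.product_is_own_specification)
    exception_b = (s.sells_to_feds_or_primes
                   and s.product_may_need_modifications)
    if custom_work_now or s.moving_into_custom_federal_work or exception_b:
        return EXPECT_IT
    if s.pain_from_uncertainty:
        return INVESTIGATE
    return OTHER_FISH


if __name__ == "__main__":
    cots_vendor = Situation(sells_to_feds_or_primes=True,
                            product_is_own_specification=True)
    print(cmmi_outlook(cots_vendor))
```

The ordering is deliberate: an external expectation is checked first because, as noted above, that group has few loop-holes regardless of how they feel about their processes.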

Is CMMI Dead?

A: NO.

NOTE: This answer assumes you know a thing or two about CMMI, so we won't be explaining some terms you'll find answered elsewhere in this FAQ.

As of this writing, after the 2013 conference and workshop held by the newly-formed CMMI Institute, we can unequivocally state the rumors of the CMMI's demise are greatly exaggerated. The Institute hired a firm to conduct an independent market survey, the Partner Advisory Board conducted a survey of Institute Partners and their sponsored individuals, and one of the Partners even took it upon themselves to hire a firm to directly contact at least 50 companies who used CMMI. The interesting finding from these surveys and market data is that use of CMMI for actual improvement (not just ratings) is on the rise. Furthermore, CMMI for Services is picking up more users, and it seems CMMI-SVC is getting some users to convert over from CMMI-DEV (which partially explains a drop in CMMI-DEV).

In the US, the DOD no longer mandates use of CMMI as a "minimum pre-qualification", but it does view CMMI as a +1 benefit in offerors' proposals. In addition, most (if not all) of the "big integrators", defense, infrastructure and aerospace firms who use CMMI continue to use and expect the use of CMMI by their subcontractors.

In short, CMMI is far from dead, and, with new initiatives (in content and appraisal approaches) under way and planned for at the CMMI Institute, the relevance and applicability of CMMI to the broader market is expected to pick up again over the coming years.

How many processes are there in CMMI?

A: NONE. Zero. Zip. Nada. Rien. Nil. Bupkis. Big ol' goose-egg. There's not a single process in all of CMMI. They're called Process Areas (PAs) in CMMI, and we're not being obtuse or overly pedantic about semantics. It's an important distinction to understand between processes and Process Areas (PAs).

So, there are *no* processes in CMMI. No processes, no procedures, no work instructions, nothing. This is often very confusing to CMMI newcomers. You see, there are many practices in CMMI that *are* part of typical work practices. Sometimes they are almost exactly what a given project, work effort, service group or organization might do, but sometimes the practices in CMMI sound the same as likely typical practices in name only and the similarity ends there. Despite the similar names used in typical work practices and in CMMI, they are *not* to be assumed to be referring to one and the same activities. That alone is enough to cause endless hours, days, or months of confusion. CMMI practices are practices that improve existing work practices, but do not *define* what those work practices must be for any given activity or organization.

The sad reality is that so many organizations haven't taken the time to look at and understand the present state of their actual work practices. As a result, not only do they not know everything they would need to know to merely run their operation, they then look to CMMI as a means of defining their own practices! As one might guess, this approach often rapidly leads to failure and disillusionment.

How you run your operation would undoubtedly include practices that may happen at any point in time in an effort and during the course of doing the work. Irrespective of where these activities take place in reality, the CMMI PAs are collections of practices to improve those activities. CMMI practices are not to be interpreted as being necessarily in a sequence or to be intrinsically distinct from existing activities or from one CMMI practice to another. Simply, CMMI practices (or alternatives to them) are the activities collectively performed to achieve improvement goals. Goals, we might add, that ought to be tied to business objectives more substantial than simply achieving a rating. There's so much more to say here, but it would be a site unto itself to do so. Besides, we never answered the question....

... in the current version of CMMI for DEVELOPMENT (v1.3, released October 2010) there are 22 Process Areas. (There were 25 in v1.1, and also 22 in v1.2.) CMMI v1.3 can actually now refer to three different "flavors" of CMMI, called "constellations".

CMMI for Development is one "constellation" of PAs. There are two other constellations, one for improving services, and one for acquisition. Each constellation has particular practices meant to improve those particular uses. CMMI for Acquisition and CMMI for Services are now all at v1.3. While much of the focus of this list is on CMMI for Development, we're updating it slowly but surely to at least address CMMI for Services, too.

Meanwhile, we'll just point out that the three constellations share 16 "core" process areas; CMMI for Development and for Services also share the Supplier Agreement Management (SAM) process area. CMMI for Acquisition has a total of 22 PAs (the 16 core PAs plus the 6 acquisition-specific PAs listed below), and Services has a total of 24 PAs. The delta between core, core + shared, and total is made up of those PAs specific to the constellation. More on that later.

We would like to thank our friend, Saif, for pointing out that our original answer was not nearly doing justice to those in need of help. The update to this answer was a result of his keen observation. Thanks Saif!

The Process Areas of CMMI are listed below. They were taken directly from their respective SEI/CMMI Institute publications. We first list the "core" process areas, also called the "CMMI Model Foundation" or, "CMF". Then we list the process area shared by two of the constellations, DEV and SVC, then we list the process areas unique to each of the three constellations, in order of chronological appearance: DEV, ACQ, then SVC.

All the PAs are listed in alphabetical order by acronym, and for those who are interested in Maturity Levels, we include in brackets '[]' which Maturity Level each PA is part of. We're also listing the purpose statement of each one.

We should also note that in process area names, purpose statements, and throughout the text, in CMMI for Services, the notion of "project" has largely been replaced with the notion (and use of the term) "work". For example, in CMMI for Services, "Project Planning" becomes "Work Planning", and so forth. The rationale for that is the result of months of debate over the relevance and subsequent confusion over the concept of a "project" in the context of service work. While the concept of a "project" *is* appropriate for many types of services, it is quite inappropriate for most services, and, substituting the notion (and use of the term) "work" for "project" has effectively zero negative consequences in a service context.

This may raise the question of why not merely substitute "work" for "project" in all three constellations? In the attitude of this CMMIFAQ, our flippant answer would be something like, "let's take our victories where we can get them and walk away quietly", but a more accurate/appropriate answer would be that product development and acquisition events are generally more discrete entities than services, and the vast majority of product development and acquisition events are, in fact, uniquely identified by the notion of a "project". Furthermore, there is nothing in the models that prevents users from restricting the interpretation of "project" or "work". It's just that re-framing "project" and "work" in their respective contexts made sense in a broader effort to reduce sources of confusion.

Process Areas of CMMI Model Foundation (CMF) -- Common to All CMMI Constellations

- Causal Analysis & Resolution, [ML 5]

The purpose of Causal Analysis and Resolution (CAR) is to identify causes of defects and other problems and take action to prevent them from occurring in the future.

- Configuration Management, [ML 2]

The purpose of Configuration Management (CM) is to establish and maintain the integrity of work products using configuration identification, configuration control, configuration status accounting, and configuration audits.

- Decision Analysis & Resolution, [ML 3]

The purpose of Decision Analysis and Resolution (DAR) is to analyze possible decisions using a formal evaluation process that evaluates identified alternatives against established criteria.

- Integrated Project Management, [ML 3]

The purpose of Integrated Project Management (IPM) is to establish and manage the project and the involvement of the relevant stakeholders according to an integrated and defined process that is tailored from the organization's set of standard processes.

- Measurement & Analysis, [ML 2]

The purpose of Measurement and Analysis (MA) is to develop and sustain a measurement capability that is used to support management information needs.

- Organizational Process Definition, [ML 3]

The purpose of Organizational Process Definition (OPD) is to establish and maintain a usable set of organizational process assets and work environment standards.

- Organizational Process Focus, [ML 3]

The purpose of Organizational Process Focus (OPF) is to plan, implement, and deploy organizational process improvements based on a thorough understanding of the current strengths and weaknesses of the organization's processes and process assets.

- Organizational Performance Management, [ML 5]

The purpose of Organizational Performance Management (OPM) is to proactively manage the organization's performance to meet its business objectives.

- Organizational Process Performance, [ML 4]

The purpose of Organizational Process Performance (OPP) is to establish and maintain a quantitative understanding of the performance of the organization's set of standard processes in support of quality and process-performance objectives, and to provide the process performance data, baselines, and models to quantitatively manage the organization's projects.

- Organizational Training, [ML 3]

The purpose of Organizational Training (OT) is to develop the skills and knowledge of people so they can perform their roles effectively and efficiently.

- Project Monitoring and Control, [ML 2]

The purpose of Project Monitoring and Control (PMC) is to provide an understanding of the ongoing work so that appropriate corrective actions can be taken when performance deviates significantly from the plan.

- Project Planning, [ML 2]

The purpose of Project Planning (PP) is to establish and maintain plans that define project activities.

- Process and Product Quality Assurance, [ML 2]

The purpose of Process and Product Quality Assurance (PPQA) is to provide staff and management with objective insight into processes and associated work products.

- Quantitative Project Management, [ML 4]

The purpose of Quantitative Project Management (QPM) is to quantitatively manage the project's defined process to achieve the project's established quality and process-performance objectives.

- Requirements Management, [ML 2]

The purpose of Requirements Management (REQM) is to manage requirements of the products and product components and to identify inconsistencies between those requirements and the work plans and work products.

- Risk Management, [ML 3]

The purpose of Risk Management (RSKM) is to identify potential problems before they occur so that risk-handling activities can be planned and invoked as needed across the life of the product or project to mitigate adverse impacts on achieving objectives.

Shared by CMMI for Development and CMMI for Services

- Supplier Agreement Management, [ML 2]

The purpose of Supplier Agreement Management (SAM) is to manage the acquisition of products from suppliers.

Process Areas Unique to CMMI for Development

- Product Integration, [ML 3]

The purpose of Product Integration (PI) is to assemble the product from the product components, ensure that the product, as integrated, functions properly, and deliver the product.

- Requirements Development, [ML 3]

The purpose of Requirements Development (RD) is to produce and analyze customer, product, and product component requirements.

- Technical Solution, [ML 3]

The purpose of Technical Solution (TS) is to design, develop, and implement solutions to requirements. Solutions, designs, and implementations encompass products, product components, and product-related lifecycle processes either singly or in combination as appropriate.

- Validation, [ML 3]

The purpose of Validation (VAL) is to demonstrate that a product or product component fulfills its intended use when placed in its intended environment.

- Verification, [ML 3]

The purpose of Verification (VER) is to ensure that selected work products meet their specified requirements.

Process Areas Unique to CMMI for Acquisition

- Agreement Management, [ML 2]

The purpose of Agreement Management (AM) is to ensure that the supplier and the acquirer perform according to the terms of the supplier agreement.

- Acquisition Requirements Development, [ML 2]

The purpose of Acquisition Requirements Development (ARD) is to develop and analyze customer and contractual requirements.

- Acquisition Technical Management, [ML 3]

The purpose of Acquisition Technical Management (ATM) is to evaluate the supplier's technical solution and to manage selected interfaces of that solution.

- Acquisition Validation, [ML 3]

The purpose of Acquisition Validation (AVAL) is to demonstrate that an acquired product or service fulfills its intended use when placed in its intended environment.

- Acquisition Verification, [ML 3]

The purpose of Acquisition Verification (AVER) is to ensure that selected work products meet their specified requirements.

- Solicitation and Supplier Agreement Development, [ML 2]

The purpose of Solicitation and Supplier Agreement Development (SSAD) is to prepare a solicitation package, select one or more suppliers to deliver the product or service, and establish and maintain the supplier agreement.

Process Areas Unique to CMMI for Services

- Capacity and Availability Management, [ML 3]

The purpose of Capacity and Availability Management (CAM) is to ensure effective service system performance and ensure that resources are provided and used effectively to support service requirements.

- Incident Resolution and Prevention, [ML 3]

The purpose of Incident Resolution and Prevention (IRP) is to ensure timely and effective resolution of service incidents and prevention of service incidents as appropriate.

- Service Continuity, [ML 3]

The purpose of Service Continuity (SCON) is to establish and maintain plans to ensure continuity of services during and following any significant disruption of normal operations.

- Service Delivery, [ML 2]

The purpose of Service Delivery (SD) is to deliver services in accordance with service agreements.

- Service System Development*, [ML 3]

The purpose of Service System Development (SSD) is to analyze, design, develop, integrate, verify, and validate service systems, including service system components, to satisfy existing or anticipated service agreements.

*SSD is an "Addition". As such, it is at the organization's discretion whether to implement SSD, and whether to include SSD in a SCAMPI appraisal.

- Service System Transition, [ML 3]

The purpose of Service System Transition (SST) is to deploy new or significantly changed service system components while managing their effect on ongoing service delivery.

- Strategic Service Management, [ML 3]

The purpose of Strategic Service Management (STSM) is to establish and maintain standard services in concert with strategic needs and plans.
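If you'd rather count than trust our arithmetic, here is a minimal sketch that tallies the lists above. The acronyms and Maturity Levels are transcribed from those lists; the variable and function names (`CORE`, `CONSTELLATIONS`, `totals`, and so on) are our own and purely illustrative.

```python
# Acronyms transcribed from the process area lists above (v1.3).
CORE = {"CAR", "CM", "DAR", "IPM", "MA", "OPD", "OPF", "OPM", "OPP",
        "OT", "PMC", "PP", "PPQA", "QPM", "REQM", "RSKM"}
SHARED_DEV_SVC = {"SAM"}
DEV_ONLY = {"PI", "RD", "TS", "VAL", "VER"}
ACQ_ONLY = {"AM", "ARD", "ATM", "AVAL", "AVER", "SSAD"}
# SSD is an "Addition", so an organization may leave it out.
SVC_ONLY = {"CAM", "IRP", "SCON", "SD", "SSD", "SST", "STSM"}

CONSTELLATIONS = {
    "DEV": CORE | SHARED_DEV_SVC | DEV_ONLY,
    "ACQ": CORE | ACQ_ONLY,
    "SVC": CORE | SHARED_DEV_SVC | SVC_ONLY,
}

# Maturity Level of each CMMI for Development PA, per the brackets above.
DEV_BY_LEVEL = {
    2: {"CM", "MA", "PMC", "PP", "PPQA", "REQM", "SAM"},
    3: {"DAR", "IPM", "OPD", "OPF", "OT", "RSKM",
        "PI", "RD", "TS", "VAL", "VER"},
    4: {"OPP", "QPM"},
    5: {"CAR", "OPM"},
}


def totals():
    """Number of process areas in each constellation."""
    return {name: len(pas) for name, pas in CONSTELLATIONS.items()}


def dev_level_counts():
    """Number of CMMI for Development PAs at each Maturity Level."""
    return {level: len(pas) for level, pas in DEV_BY_LEVEL.items()}


if __name__ == "__main__":
    print(totals())            # {'DEV': 22, 'ACQ': 22, 'SVC': 24}
    print(dev_level_counts())  # {2: 7, 3: 11, 4: 2, 5: 2}
```

Sets are used so that a PA accidentally listed twice can't inflate a total, and the Maturity Level grouping doubles as a cross-check that every DEV process area has been assigned exactly one level.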

How are the processes organized?

A: This answer looks at how Process Areas are organized relative to one another. The next FAQ question addresses the elements of each Process Area. Process Areas are organized in two main ways, called "Representations".

- Staged, and

- Continuous

Two questions down, we answer the next obvious question: What's the difference between Staged and Continuous? For now, just trust us when we say that this really doesn't matter except to a very few people and organizations who really geek out over this idea of "pathways" through an improvement journey. Ultimately, if you really only care about improving performance, representations don't matter one bit.

What is each process made up of?

A: Each process area is made of two kinds of goals, two kinds of practices, and a whole lot of informative material.

The two goal types are Specific Goals and Generic Goals, and the two practice types follow suit: Specific Practices and Generic Practices. Astute readers can probably guess that Specific Goals are made up of Specific Practices and Generic Goals are made up of Generic Practices.

Every Process Area (PA) has at least one Specific Goal (SG), made up of at least two Specific Practices (SPs). The SPs in any PA are unique to that PA, whereas, other than the name of the PA in each of the Generic Practices (GPs), the GPs and Generic Goals (GGs) are identical in every PA. Hence, the term "Generic".

PAs all have anywhere from 1 to 3 Generic Goals -- depending on which model representation (see the previous question) the organization chooses to use, and, the path they intend to be on to mature their process improvement capabilities.

The informative material is very useful, and varies from PA to PA. Readers are well-advised to focus on the Goals and Practices because they are the required and expected components of CMMI when it comes time to be appraised. Again, if improving performance is important to you and appraisals are not something you care about, then these goal-practice relationships and normative/informative philosophies don't really matter at all, and neither does how the model is organized. Read more about that here. Use anything in it to make your operation perform better!

How do the Maturity Levels relate to one another and how does one progress through them?

A: We touch on this subject below but here is some additional thinking about the subject:

Before we get started, it's important to remind readers that the entire CMMI is about improving performance, not about defining processes or performing practices. If you go back to the challenge posed to the original founders of CMM, they wanted greater predictability and confidence that development projects (originally limited to software, but since expanded to any kind of development or services) would be successful. The practices in CMM and later CMMI were intended to provide that confidence. The rationale being that these practices provided the capability to increase performance and the increased performance would lead to better outcomes. Problems started when people saw the practices themselves as the intended outcome and not the increase in performance the practices were supposed to catalyze.

Part of this problem has to do with the evidence required for CMMI appraisals. Our sponsor company also has a problem with the appraisal in this regard. It is entirely possible for an organization to actually perform the practices in CMMI while the evidence of having performed them is very brief and difficult to capture. Unfortunately, the way the appraisal is currently defined, such fleeting artifacts are challenging to demonstrate in an appraisal, and the value-add of attempting to preserve them is often very low. As you can imagine, the benefits of CMMI extend far beyond the appraisal, and in fact, exist regardless of whether or not an appraisal is performed.

For the remainder of this explanation, we will assume that we are after the performance benefits of CMMI and we are not too worried about the appraisal expectations. We will leave those for others to worry about. There are plenty of experts throughout the world who can answer specific questions about evidence. (Except for our own contributors, of course, we do not endorse any of them and we do not know whether they will give you correct or even satisfactory answers.)

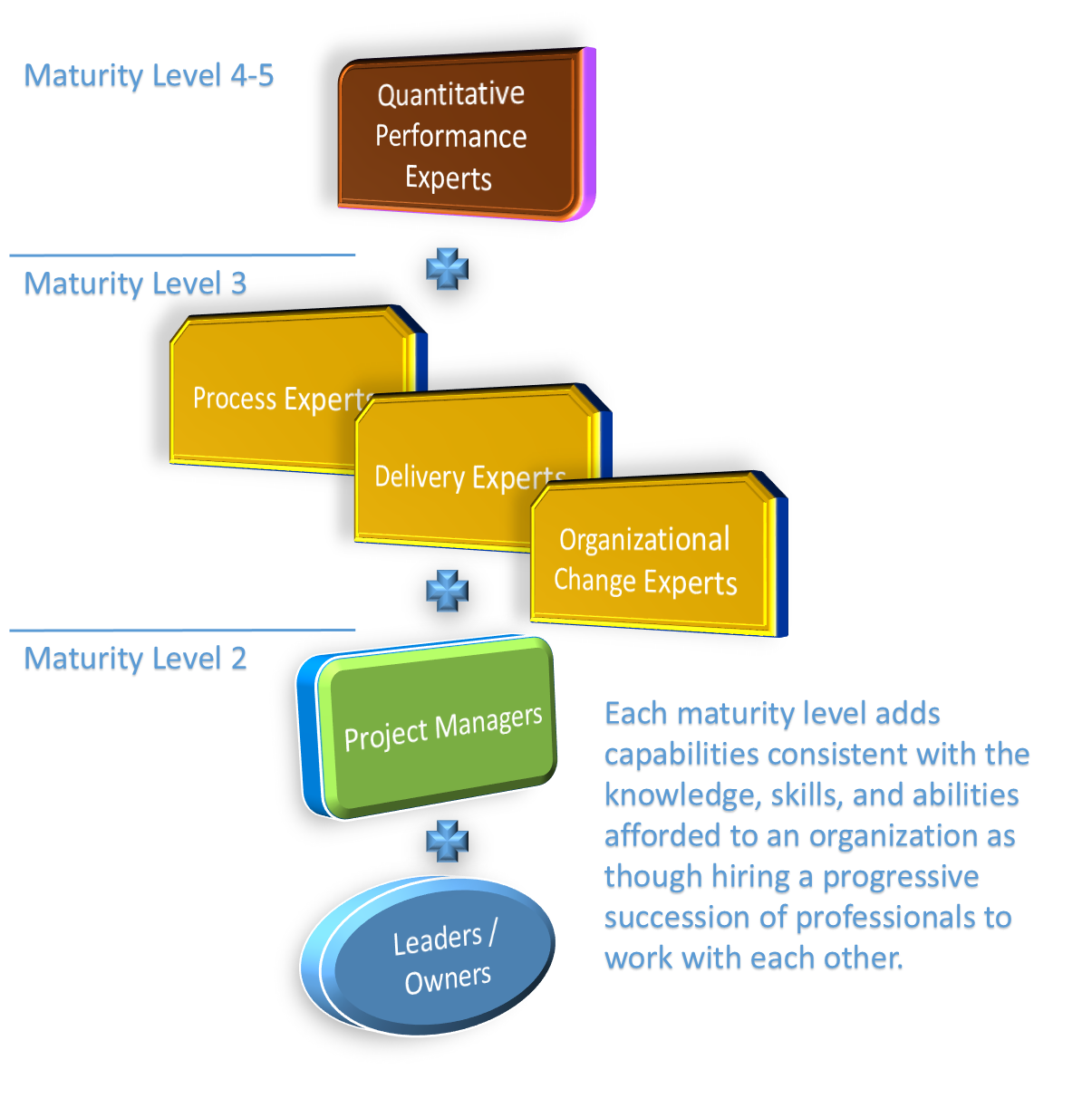

To explain the different Maturity Levels, we use the idea of hiring particular experts. Clearly, using the practices of each Maturity Level does not require hiring such experts! We are only using the idea of "hiring experts" to help explain the contents of each Maturity Level. For example, you don't need to hire a coach to lose weight. You can read about it in many different books with many different ideas and come up with something that works for you. But, if you did hire a health coach, you would gain the benefit of their expertise without having to figure it out for yourself.

Maturity Level 2 is very, very basic. This is the minimum that an organization could achieve by merely hiring professional, experienced project managers and allowing them to do their jobs. These project managers would work directly with the organization's leaders and owners to help projects be successful and the organization to be profitable. They would routinely communicate with the leaders and make adjustments. Companies struggling to incorporate or demonstrate use of the practices in CMMI ML2 are likely to be widely inconsistent in when they deliver and in the quality of what they deliver, and their profits are likely to be highly unpredictable. Such organizations frequently take on more work than they can handle. They then proceed to do a poor job of planning the level of effort and dependencies required to complete the work. When projects don't meet financial or customer expectations, companies who don't perform ML2 practices don't know where to begin to understand why, and they typically turn to specific people to try to figure out what went wrong. Very often people (internal staff or customers) get blamed for the missed expectations when the problems really started with the company's own lack of situational awareness. ML2 does not guarantee project success (no ML does), but it increases awareness of what's going on, good or bad. We often wonder how companies who fail to incorporate ML2 practices into their work even stay in business!

Organizations using ML2 practices mostly use data and metrics to ensure their projects are on budget, on time, and people are doing the work being asked of them. Organizations without the issues we describe above often perform the ML2 practices without realizing they are doing so.

Maturity Level 3 has quite a bit more going on. An organization that brings in people who are experts in:

- process management,

- the technical/operational work of delivery (development or services, etc.), and

- organizational change and development

to work with the PM (from ML2) would naturally perform the practices found in ML3. The reason to add such people is to:

- facilitate communication and coordination throughout the organization and to learn and share observations from the successes and failures of other projects,

- establish performance norms for how to do the materially core work of the organization, and

- put in place the mechanisms for continuous improvement, learning, strategic growth, and decision-making.

Furthermore, you would have the PM involved in ensuring the time and effort required to look across the organization is not used up by the projects. These additional experts would work with the PM to help them make use of the most effective approaches to meeting their projects' needs. While projects often don't provide useful performance data until near the end of the work, these new experts would help the organization's leaders understand how well the organization is performing from the inside even while projects are in the middle of execution. ML3 organizations use data and metrics to help understand their internal costs and effectiveness. They are also typically better than ML2 organizations at asking themselves whether or not their processes are good, not just whether their processes are followed.

Companies who don't perform ML3 practices well may have problems coordinating across projects. They are also likely to experience issues with accounting for risks and other critical decisions. They will often have inefficiencies in many places including the technical or operational work but they will be unaware of these inefficiencies or how these inefficiencies become problems both for the projects and for the company's profits. Companies with issues in the ML3 practices will often be playing "catch up" with opportunities due to insufficient consideration of training as a strategic enabler. Similarly, they will often experience slow and tedious communication and decision-making that further slows their ability to be responsive to the market or their customers. Companies without these issues are likely to already handle CMMI ML3 practices in some way.

Maturity Levels 4 and 5 are unique. We consider them together because there's almost no reason to separate them in actual practice. Together, ML4 and ML5 are called "high maturity" ("HM") in CMMI. And we look at ML4 and ML5 together as the set of practices organizations incorporate to help them become "high performance operations" ("HPO"). The type of expert(s) added to an ML3 organization to make them HPOs are experts in using quantitative techniques to manage the tactical, strategic and operational performance of the organization. HM practices take the data from ML2 and ML3 to better help them control variation and to make them more predictable operations. They also use the data to decrease uncertainty and increase confidence in their performance predictions at the project and organizational levels.

Organizations using HM practices are better at forecasting their performance than companies with ML3 capabilities. They are also better at managing their work throughout the organization. HPOs have a greater awareness of how their processes work and whether or not they can rely on their processes to achieve their desired outcomes. Companies who do not operate with HM practices may not be struggling; however, they may have issues with their competitiveness or issues with their gross margin that they are unable to solve. Many companies who operate at ML3 are unlikely to be as competitive as companies with HM practices. Also, ML3 organizations' primary approaches to improving performance are eliminating muda ("waste"), reducing head-count, making frequent organizational structure changes, and linearly increasing marginal growth through increased sales. Decision-making among ML3 organizations is typified by "deterministic" approaches. On the other hand, HPOs improve performance through use of process performance models and strategic investment in processes and tools, and they use a "probabilistic" decision-making approach.

Again, it is critical to point out that these explanations are not necessarily sufficient to produce material for an appraisal. We also remind you that our description of "hiring experts" is not actually about hiring anyone. It is merely a metaphor to explain that people with certain skills will do certain things when allowed to do their jobs. If someone were to observe what they do, the observer would find the CMMI practices likely embedded into their work.

What's the difference between Staged and Continuous?

A: It's just different ways of looking at the same basic objects...

The main difference is simply how the model is organized around the path towards process improvement that an organization can take. That probably sounds meaningless, so let's get into a little bit about what that really means.

The SEI, based on the original idea behind the CMM for Software, promoted the notion that there are more fundamental and more advanced (key)* process areas that organizations should endeavor to get good at on the way to maturing their processes towards higher and higher capabilities. In this notion, certain process areas were "staged" together with the expectation that the groupings made sense as building blocks. Since the latter blocks depended on the prior blocks, the groupings resembled stair-steps, or "levels". The idea then was that the first level didn't include any process areas, and that the first staging of (K)PAs* (the actual "level 2") was a set of very fundamental practices that alone could make a significant difference in performance.

From there, the next staging of PAs, or "level 3", could begin to exploit the foundational PAs and begin to effect process improvement changes from a more detailed technical and managerial perspective. Whereas PAs up through Level 3 have some degree of autonomy from one another, Levels 4 and 5 add Process Areas that look across all the other process areas as well as other activities not exclusively limited to process-area-related efforts. While Levels 4 and 5 only add a total of four PAs, they are not in the least trivial. They add the maturity and capability to manage processes by numbers rather than only by subjective feedback, and they add the ability to optimize and continuously improve process across the board based on a statistically-backed quantitative understanding of effort and process performance.

Then along came a group of people who said, in effect, why not be able to improve any one process area to the point of optimization without needing all process areas to be there? In fact, why not be able to focus on process areas with high value to the organization first and then go after other process areas, or maybe even ignore any process areas that we don't really need to improve?

In the staged representation, which is the original Software CMM approach, this ability to mature a capability in any one process area doesn't exist, so in CMMI, the idea of a Continuous representation was taken from a short-lived "Systems Engineering" CMM and implemented -- whereby an organization could choose to get really really good at any number of PAs without having to put forth the effort to implement low-value or unused PAs. This becomes especially meaningful to organizations that want to be able to benchmark themselves (or be formally rated) in only areas that matter to them.

For example, suppose an organization is expert at performing activities in the Verification (VER) process area. They want to be world-renowned for it. In fact, they already are. But they'd like to not only create turn-key verification activities, they want it down to a science for financial and logistical reasons. They want to be running verification at a continually optimizing pace. Without the continuous representation, there'd be no way in CMMI either to work towards this continually optimizing state or to gain any recognition for it.

So, to understand the Continuous representation of the model, it should be enough to know that this representation allows organizations to pick any number of process areas, and also pick the depth of capability they want to reach in those process areas. The key determinant in such a capability lies in the Generic Goals. As we will cover in the next question, the "level" of capability of an organization using the Continuous representation has to do with the Generic Goal they've institutionalized, not the number or mix of PAs.

*In the original CMM for Software, the process areas were called "Key Process Areas", or KPAs, and there was no distinction between types of levels (see below). Therefore there was only one type of level, and when someone said "level 3" everyone understood. In CMMI, there are two level types which correspond to the two model representations (see below). Saying "level" in the context of CMMI is incomplete. However, for anyone reading this FAQ from the beginning, this concept has not yet been introduced, and we didn't want to start adding terms that had not yet been defined.

What's the difference between Maturity Level and Capability Level?

A: They are different ways of rating your process areas...

Let's start with the basics. A "Maturity Level" is what you can be appraised to and rated as when the organization uses the Staged Representation of the CMMI, and a "Capability Level" is what you can be appraised to and rated as when the organization uses the Continuous Representation of the CMMI. As for the details...

A "Maturity Level" X means that an organization, when appraised, was found to be satisfying the goals required by process areas in that level (X). Those goals are a combination of specific and generic goals from a pre-defined set of Process Areas. Each "Maturity Level" has a particular set of PAs associated with it, and, in turn, each of those PAs has a delineated set of goals.

Maturity Level 2 (ML 2) in CMMI for Development requires the following PAs be performed up to and including Generic Goal 2 within them:

- Requirements Management (REQM)

- Project Planning (PP)

- Project Monitoring and Control (PMC)

- Supplier Agreement Management (SAM)

- Measurement and Analysis (MA)

- Process and Product Quality Assurance (PPQA), and

- Configuration Management (CM)

Maturity Level 3 (ML 3) in CMMI for Development requires the ML 2 PAs, plus the following PAs be performed up to and including Generic Goal 3 within all of them:

- Requirements Development (RD)

- Technical Solution (TS)

- Product Integration (PI)

- Verification (VER)

- Validation (VAL)

- Organizational Process Focus (OPF)

- Organizational Process Definition (OPD)

- Organizational Training (OT)

- Integrated Project Management (IPM)

- Risk Management (RSKM), and

- Decision Analysis and Resolution (DAR)

Maturity Level 4 (ML 4) requires the ML 2 and 3 PAs, plus the following PAs be performed up to and including Generic Goal 3 within all of them:

- Organizational Process Performance (OPP) and

- Quantitative Project Management (QPM)

And finally, Maturity Level 5 (ML 5) requires the ML 2-4 PAs, plus the following PAs be performed up to and including Generic Goal 3 within all of them:

- Organizational Performance Management (OPM) and

- Causal Analysis and Resolution (CAR)

For CMMI for Services and CMMI for Acquisition, the idea is the same, only some of the process areas are swapped out at both ML 2 and ML 3 for their respective disciplines. You can refer back to this question to fill in the blanks on which PAs to swap in/out for CMMI for Services and CMMI for Acquisition at ML2 and ML3. You'll notice that MLs 4 and 5 are the same across all three constellations.
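The cumulative structure described above for CMMI for Development can be sketched as a small lookup. This is only an illustration of the lists in this answer, not an appraisal tool; the names `PAS_ADDED_AT` and `requirements_for` are made up for this example.

```python
# PAs added at each maturity level in CMMI for Development, as listed above.
PAS_ADDED_AT = {
    2: ["REQM", "PP", "PMC", "SAM", "MA", "PPQA", "CM"],
    3: ["RD", "TS", "PI", "VER", "VAL", "OPF", "OPD", "OT", "IPM", "RSKM", "DAR"],
    4: ["OPP", "QPM"],
    5: ["OPM", "CAR"],
}


def requirements_for(maturity_level):
    """Return {PA: highest Generic Goal required} for a target maturity level."""
    if maturity_level not in PAS_ADDED_AT:
        raise ValueError("maturity level must be 2, 3, 4, or 5")
    # ML 2 requires Generic Goal 2; ML 3 and above require Generic Goal 3
    # in every PA, including the PAs carried over from lower levels.
    required_gg = 2 if maturity_level == 2 else 3
    required = {}
    for level in range(2, maturity_level + 1):
        for pa in PAS_ADDED_AT[level]:
            required[pa] = required_gg
    return required


ml3 = requirements_for(3)
print(len(ml3), ml3["REQM"], ml3["VER"])  # 18 3 3
```

Note how REQM, an ML 2 process area, moves from Generic Goal 2 to Generic Goal 3 once the target is ML 3.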

Now, if you recall from the earlier FAQ, the Continuous representation is tied to the Generic Goals, and from above, Capability Levels are attained when using the Continuous representation. So with that, Capability Levels are then tied to the Generic Goals. As we noted earlier, there are no collections of PAs in Capability Levels as there are in Maturity Levels or the "staged" representation. Therefore, it is far simpler to explain that a Capability Level is attained PA by PA. An organization can choose (or perhaps not by choice, but by de facto performance) to be at different Capability Levels (CLs) for different PAs. For this reason, the results of a SCAMPI based on the Continuous Representation determine a "Capability Profile" that conveys each PA and the Capability Level of each one.

Basically, the Capability Level of a PA is the highest Generic Goal at which the organization is capable of operating. Since there are actually three Generic Goals, 1-3, an organization can also be found to be operating at a Capability Level of ZERO (CL 0), in which they aren't even achieving the first Generic Goal, which is simply to "Achieve Specific Goals".

Thus, the three Capability Levels are (in our own words):

- Capability Level 1: The organization achieves the specific goals of the respective process area(s).

- Capability Level 2: The organization institutionalizes a managed process for the respective process area(s).

- Capability Level 3: The organization institutionalizes a defined process for the respective process area(s).
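As a rough sketch of the idea that a Capability Level is attained PA by PA, the snippet below derives a "Capability Profile" from which Generic Goals each PA satisfies. It treats the Generic Goals as cumulative (a higher goal only counts if every lower one is met). The function names and the sample results are hypothetical, invented purely for illustration.

```python
def capability_level(goals_satisfied):
    """Highest Generic Goal n such that GG1..GGn are all satisfied (0 if none).

    goals_satisfied maps a Generic Goal number to True/False,
    e.g. {1: True, 2: True, 3: False}.
    """
    level = 0
    for gg in (1, 2, 3):
        if not goals_satisfied.get(gg, False):
            break  # a gap at this goal caps the level; higher goals don't count
        level = gg
    return level


def capability_profile(results):
    """Map each PA to its Capability Level."""
    return {pa: capability_level(goals) for pa, goals in results.items()}


# Hypothetical findings for three process areas.
results = {
    "VER": {1: True, 2: True, 3: True},
    "PP": {1: True, 2: True, 3: False},
    "SAM": {1: False, 2: True},  # GG2 cannot count without GG1
}
print(capability_profile(results))  # {'VER': 3, 'PP': 2, 'SAM': 0}
```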

What are the Generic Goals?

a.k.a. What are the differences among the Capability Levels?

a.k.a. What do they mean when they say process institutionalization?

A: The Generic Goals *are*, in fact, perfectly parallel with the Capability Levels. In other words, Generic Goal 1 (GG1) aligns with Capability Level 1 (CL1). GG2 with CL2, and GG3 with CL3. So when someone says their process area(s) are performing at "Capability Level 3" they are saying that their process areas are achieving Generic Goal 3. The Generic Goals are cumulative, so saying that a process area is CL3 (or GG3) includes that they are achieving GG1 and GG2 as well.

Before we get into a discussion about the idea of institutionalization, let's list the Generic Goals:

-

Generic Goal 1 [GG1]: The process supports and enables achievement of the specific goals of the process area by transforming identifiable input work products to produce identifiable output work products.

Generic Practice 1.1 [GP 1.1]: Perform the specific practices of the process to develop work products and provide services to achieve the specific goals of the process area.

-

Generic Goal 2 [GG2]: The process is institutionalized as a managed process.

Generic Practice 2.1 [GP 2.1]: Establish and maintain an organizational policy for planning and performing the process.

Generic Practice 2.2 [GP 2.2]: Establish and maintain the plan for performing the process.

Generic Practice 2.3 [GP 2.3]: Provide adequate resources for performing the process, developing the work products, and providing the services of the process.

Generic Practice 2.4 [GP 2.4]: Assign responsibility and authority for performing the process, developing the work products, and providing the services of the process.

Generic Practice 2.5 [GP 2.5]: Train the people performing or supporting the process as needed.

Generic Practice 2.6 [GP 2.6]: Place selected work products of the process under appropriate levels of control.

Generic Practice 2.7 [GP 2.7]: Identify and involve the relevant stakeholders as planned.

Generic Practice 2.8 [GP 2.8]: Monitor and control the process against the plan for performing the process and take appropriate corrective action.

Generic Practice 2.9 [GP 2.9]: Objectively evaluate adherence of the process against its process description, standards, and procedures, and address noncompliance.

Generic Practice 2.10 [GP 2.10]: Review the activities, status, and results of the process with higher level management and resolve issues.

-

Generic Goal 3 [GG3]: The process is institutionalized as a defined process.

Generic Practice 3.1 [GP 3.1]: Establish and maintain the description of a defined process.

Generic Practice 3.2 [GP 3.2]: Collect work products, measures, measurement results, and improvement information derived from planning and performing the process to support the future use and improvement of the organization's processes and process assets.

So, you're wondering what's this business about institutionalization. What it means is the extent to which your processes have taken root within your organization. It's not just a matter of how widespread the processes are, because institutionalization can take place in even 1-project organizations. So then, it's really about how they're performed, how they're managed, how they're defined, what you measure and control the processes by, and how you go about continuously improving upon them.

If we look at what it takes to manage any effort or project, we will find what it takes to manage a process. Look above at GG2. You'll see that each of those practices is easy to understand if we were discussing projects, but when it comes to processes, people have trouble internalizing these everyday management concepts. All institutionalization reduces to is the ability to conduct process activities with the same rigor that we put forth to effectively execute projects.

The GPs are all about the processes, not necessarily the work of the services or projects performed. GPs make sure the processes are managed and executed and are affected contextually by the work being done but are otherwise agnostic as to the work itself. In other words, you could be executing the processes well/poorly as far as GPs go, but the work itself might still be on/off schedule, budget, etc.

Assume for a moment that the process execution is out of step with the results of the work. For example, the processes are crappily done, but the work is still on time and on budget and the customers are happy. That's possible, right? What it tells us is that the processes are actually garbage and wasteful, that they have no beneficial impact on the work.

GPs ensure the processes are (defined and) managed as though you'd (define and) manage the work. When they're out of sync (like in the example above) it tells you that the processes suck and your people are still why your efforts succeed; they're not really influenced by the processes. It also tells you that if the processes were to disappear no one would notice, but if key people in the company were to disappear, everything would fall apart, and the processes can't be relied upon to carry the load.

It's not that we ever want processes to replace good people, but when even good people aren't supported by good processes and the people are constantly working around the process instead of working with the processes, then you've got risks and issues. Good processes allow good people to think forward and apply themselves in more value-added ways than in reinventing the routine work every time they need to perform it. Good processes also allow the good people to unload busy work to less experienced people while they go off applying their experience to new ideas and improved performance leaving the routine stuff to people who can follow a process to uphold the status quo performance.

The point, here, being that the GPs ensure the processes are working and operating effectively. They're not about the specific work products of the service or project.

These concepts were not as consistently articulated in pre-CMMI versions of CMM. But if all this is still confusing, please let us know where you're hung-up and we'll be happy to try to answer your specific questions.

What's High Maturity About?

a.k.a. What's the fuss about High Maturity Lead Appraisers?

a.k.a. What's the fuss about the informative materials in the High Maturity process areas?

A: "High Maturity" refers to the four process areas that are added to achieve Maturity Levels 4 and 5:

- Organizational Process Performance (OPP),

- Quantitative Project Management (QPM),

- Organizational Performance Management (OPM), and

- Causal Analysis and Resolution (CAR)

Collectively, these process areas are all about making decisions about projects, work, and processes based on performance numbers, not opinions, not compliance, and eventually not on "rearward-looking" data, rather, forward-looking and predictive analysis.

It's not just any numbers, but numbers that tie directly into the organization's business and performance goals, and not just macro-level goal numbers but numbers that come from very specific, high-fidelity sub-processes that are used by work and projects and can predict a work effort and/or project's performance as well as the process' outcomes.

What enables this sort of quantitative-centric ability are benchmarks about the organization's processes (called process "performance baselines") and the predictive analysis of the organization's processes (called process "performance models"). Together the process performance baselines and models provide the organization with an idea of what their processes are really up to and what they can really do for the bottom line. This is not usually based on macro-level activities, but on activities for which nearly every contributing factor to the process is known, quantifiable, measured, and within the organization's control.
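To make the baseline/model distinction a little more concrete, here is a toy sketch. The data, the choice of measures (review rate and defects found per page), and the use of a simple least-squares line as the "model" are all assumptions made for illustration; CMMI does not prescribe any particular measures or modeling technique.

```python
from statistics import mean, stdev

# Hypothetical history of one sub-process:
# (review rate in pages/hour, defects found per page)
history = [(4.0, 0.9), (6.0, 0.8), (8.0, 0.6), (10.0, 0.5), (12.0, 0.3)]
rates = [rate for rate, _ in history]
found = [defects for _, defects in history]

# "Performance baseline": what the process has actually delivered so far.
baseline = {"mean": mean(found), "stdev": stdev(found)}


def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# "Performance model": predict an outcome from a factor the team controls.
intercept, slope = fit_line(rates, found)


def predict_defects_found(review_rate):
    return intercept + slope * review_rate


print(baseline)
print(predict_defects_found(7.0))
```

The baseline describes past performance; the model lets a project ask "what if we review at 7 pages per hour?" before committing to a plan.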

Since processes are used by projects and other work, and since, at maturity (and capability) levels beyond level 2, the projects are drawing their processes from a pool of defined practices, the ability to create processes for projects and other work, and to manage performance of both the work/project activities and process activities, is facilitated by the quantification of both work/project and process performance. This results in process information that simultaneously supports work/project outcomes as well as provides insight into how well the processes are performing.

In order, however, for the process data to provide value, they must be stable and in control. Determining whether processes are stable and in control is usually a matter of statistical analysis. Therefore, at the core of all this quantification is a significant presence of statistics, without which process data is not always trustworthy (remember: high maturity, it's different at ML2 and 3), and that data's ability to predict outcomes is tenuous, at best.
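One common way to check whether a process is "stable and in control" is an individuals (XmR) control chart. The sketch below is only one possible technique, chosen here for illustration; it applies just the simplest rule (a point outside the control limits), and the sample data are made up.

```python
from statistics import mean


def xmr_limits(values):
    """Return (lower, center, upper) limits for an individuals (XmR) chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    mr_bar = mean(moving_ranges)
    # 2.66 is the standard individuals-chart constant (3 / d2, d2 = 1.128).
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar


def out_of_control_points(values):
    """Return (index, value) pairs that fall outside the control limits."""
    lower, _, upper = xmr_limits(values)
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical effort (hours) for ten consecutive peer reviews.
effort = [10, 11, 9, 10, 12, 10, 11, 25, 10, 9]
print(out_of_control_points(effort))  # [(7, 25)]
```

A flagged point is a signal to look for a special cause before trusting the data for prediction; an empty list under this single rule does not by itself prove the process is stable.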

The eventual need and ability to modify the processes similarly exploits the value richness of statistics and higher analytical techniques. By the time an organization is operating at higher levels of maturity, they will expect themselves to rely on hard data and process professionalism to guide them towards developing, testing, and implementing process changes.

The fuss about all this is multi-faceted. To name a few facets, we can begin by categorizing them (though the categories are not unrelated to each other) as:

- the only required and expected components of the model are the goals and practice statements, respectively, and

- misunderstanding and/or misinterpretation of the model high maturity practices.

The action to address these facets stems from a flood of findings that, in many high maturity appraisals, the artifacts accepted as evidence did not convey that the proper intent and implementation of these higher maturity concepts were applied at the organizations appraised. In fact, the opposite was found to be true: what *was* accepted as evidence clearly indicated that the high maturity practices were *NOT* implemented properly. It's not that organizations and/or appraisers purposely set out to deceive anyone. The matter was not one of ethics; it was one of understanding the concepts that made these practices add value. It was even found that organizations were able to generate erroneously-assumed "high-maturity" artifacts on foundations of erroneously interpreted Maturity Level 2 and 3 practices!

The crux of the matter is/was that none of the practices of the model at any level are entirely stand-alone. They (nearly) all have some informative material that provides context and edification for the practice statements. Since CMMI is a model, and since no model is complete -- by definition -- the most any model user can hope for is edification, explanation, and examples of use. The CMMI's informative material provides this.

At maturity levels 2 and 3, many of the practices are not foreign to people with some process discipline experience such as change control, project management, peer reviews and process checks. They may yet be a bit arcane for some, therefore explanations are provided; the practices at the lower-numbered maturity levels enjoy a wide array of people who can understand and perform them to satisfy the goals of process areas without additional explanation. (Think of it this way: more people know algebra than differential equations, but for some people, even algebra is a stretch.) Practical methods and alternative implementations for these practices abound.

This seems not the case with higher maturity practices in maturity levels 4 and 5. The practices of "high maturity" activities require a different set of skills and methods that few individuals applying CMMI ever have the need or opportunity to acquire. These would be the "differential equations" population in our prior analogy. Thus, the informative materials (namely, sub-practices and typical work products) become far more important -- not towards the required artifacts of an appraisal, but for the proper implementation of what's intended by the practices.

An analogy used elsewhere bears repeating here: want to implement ML2 and ML3? Hire a project manager, a really good operations person in your type of work (e.g., development or services), and a process improvement specialist. Want to implement ML4 and ML5? Team up the first three with a process improvement specialist with expertise in statistical process control, operations research, lean thinking (such as design of experiments) and skills in advanced analytical techniques. For people who can claim the latter on their résumés, little, if anything, in ML4 & ML5 is new to them. ML4 & ML5 bear much resemblance to Six Sigma activities.

To address these findings, SEI created a certification for lead appraisers, and a class for anyone looking to implement high maturity concepts. The certification is intended to ensure that lead appraisers performing high maturity appraisals understand what the concepts mean and what they need to look for, and the course provides deeper insight and examples about the practices and concepts.

Although there are many lead appraisers providing consulting to clients about high maturity concepts, only CMMI-Institute-Certified High Maturity Lead Appraisers ("HMLAs") can perform the appraisals to those levels. Although a blanket statement would be highly inappropriate, there have been clear, well-documented cases of non-HMLAs providing very poor advice on high-maturity implementations. This FAQ strongly recommends that any organization pursuing high maturity take advantage of some consulting by a CMMI-Institute-Certified HMLA well before attempting a SCAMPI to those levels. Having said that, organizations should still take steps to ensure they hire a good-fitting lead appraiser.

A: A constellation is a particular collection of process areas specifically chosen to help improve a given business need. Currently there are three (3) constellations:

- Development: For improving the development of (product or complex service) solutions.

- Acquisition: For improving the purchasing of products, services and/or solutions.

- Services: For improving delivery of services and creation of service systems (say, to operate a solution but not buy it or build it in the first place).

- RD

- TS

- PI

- VER

- VAL, and

- SAM

A quick reminder that the process areas listed here are for the DEV constellation only. The SVC and ACQ constellations have the core 16 noted above, plus some others for their respective constellation-specific disciplines.

How many different ways are there to implement CMMI?

A: Infinite, but 2 are most common.

But before we get into that, let's set the record straight. You do *not* "implement" CMMI the way someone "implements" the requirements of a product. The only things getting "implemented" are your organization's work flows along with whatever "standard processes" and associated procedures your organization feels are appropriate--not what's in CMMI. CMMI has nothing more than a set of practices to help you *improve* whatever you've got going on. CAUTION: If whatever you've got going on is garbage, CMMI is unlikely to help. AND, if you create your organization's processes using only CMMI's practices as a template, not only will you never get anything of value done, but your organization's work flows will be dreadfully lacking all the important and necessary activities to operate the business!

Let's say that again: You need to know what makes your business work. You need to know how to get work done. You need to know what your own work flows are BEFORE you will get anything good from CMMI. CMMI is awful as a process template! The *BEST* way to use any CMMI practice is to read that practice and ask yourself any of the following questions:

- "Where in our workflow does *that* happen?"

- "How does *that* show up?"

- "What do we do that accomplishes *that*?"

- Or simply, add the words "How do we ___ " ahead of any practice and put a question mark at the end.

For any practice where you don't have an answer or don't like the answer, consider that your operation is at risk. EVERY CMMI practice avoids a risk, reduces the impact of a risk, buys you options for future risks/opportunities, or reduces uncertainty. EVERY.ONE. You might need a bit of expert guidance to help you refactor the practice so that it appears more relevant and useful to your particular needs, but there is a value-add or other benefit to every practice. Truly.

(Admittedly, whether or not there's value to *your* business to modify your behavior to realize the benefit of a given practice is an entirely different question.)

Now, as for the "2 most common approaches". There's what we call the blunt-object (or silo'd or stove-piped) approach, which is, unfortunately, what seems to be the most common approach in our observation. In this approach CMMI is implemented with the grace and finesse of a heavy, blunt object at the end of a long lever -- impacting development organizations and managers' collective craniums. This is most commonly found among organizations who care not one whit about actual performance improvement and only care about advertising their ratings.

And then, there's the reality-based approach, in which processes are implemented in such a way that work and service personnel may not even know it's happening. Can you guess which one we advocate?

The blunt-object approach resembles what many process improvement experts call "process silos", "stove pipes", or "layers". This approach is also often implemented *to* a development team *by* some external process entity with brute force and very extreme prejudice. So, not only does the blunt approach employ some very unsavory techniques, subjecting its royal subjects to cruel and unusual process punishment, it also (in its design) is characterized by a "look and feel" of a process where each process is in its own vacuum, without any connection to other processes (or to reality, for that matter), and where the practices of the processes are somehow expected to be performed serially, from one to the next, in the absence of any other organizational context.

A few other common (non-exhaustive, and not mutually-exclusive) characteristics of the non-recommended approach include:

- Heavy emphasis on compliance irrespective of performance.

- Little or no input from staff on what the processes should be.

- Using CMMI practices as project or process "requirements".

- Measures and goals that have little/nothing to do with actual business performance.

- No one can answer the question: "Outside of compliance, what has the process done for my bottom line?"

- Complaints about the "cost of compliance" from people who actually watch things like the bottom line.

If so many implementations of CMMI are guided by an (internal or external process) "expert", one might (justifiably) wonder how and why CMMI processes could ever be implemented with such an obviously poorly conceived approach!

There are two (sometimes inter-related) reasons:

- Lack of understanding of the model, and

- Being an expert process auditor, and not a process improvement expert.

We've come across countless examples of organizations' attempts to implement CMMI while being led by someone (or several someones) who fit at least one of those two descriptions, and too frequently, both at once. Frightening, but true. The jury is still out on whether it's worse to be led by such a non-expert or to attempt "Do-It-Yourself" CMMI implementation. What the jury is definitely in agreement on is that if your focus is on CMMI and not on improving business performance, you're really wasting your time. Again, we digress....

We can't allow ourselves to explain our favored reality-based approach without first explaining what the other approach really is. Not so that our approach looks better, and not because we must justify our approach, but because we feel that it's important for people new to CMMI and/or to process/performance improvement to be prepared to recognize the signs of doom and be able to do something about it before it's too late.

All kidding aside, believe it or not, there are organizations for whom the blunt/silo/stove-pipe approach actually works well, and we wouldn't necessarily recommend that they change it. These organizations tend to share certain characteristics, including any number of the following: being larger, being bureaucratic by necessity, managing very large and/or complex projects, and having an actual, justifiable reason for their approach. In fact, in these cases, the effect is actually neither blunt nor particularly silo'd, because these types of organizations have other mechanisms for "softening" the effect that such an approach would have on smaller projects/organizations. And that is precisely how we can characterize the main difference between the two approaches: we believe that the reality-based approach to implementing CMMI works well in most types of organizations and on work/projects of most any scope, where the brute-force approach would not.

What does the blunt/brute-force/silo/stove-pipe approach look like?

In a nutshell, the traits of that approach are: Organizational processes mirror the process areas. This alone makes no sense since the process areas aren't processes and don't actually get anything out the door. Process area description documents are prescriptive, and implementation of the processes does not easily account for the inter-relatedness of the process areas to one another, or of the generic practices to the specific practices. Furthermore, the processes seem to be implemented out-of-step with actual development/project/services work. Nowhere in the descriptions or artifacts of the processes is it clear how and when the process gets done. It's not a matter of poorly written processes. Quite the opposite: many of these processes are exemplars of process documents. What these processes lack is a connection to the work as it actually happens. Without a process subject-matter expert on hand, it's unlikely that the process would actually get done. In many cases (thanks to the sheer size of the organization) such processes *are*, in fact, done by a process specialist, and not by personnel doing the work.

In other words, with such processes, if an organization doesn't have the luxury of process specialists to do the process work, it would be difficult for someone actually doing the real work who is trying to follow the processes to see how the process activities relate to his or her activities and/or to see when/where/how to implement the process activities on actual tasks at hand. Because of this, this approach to CMMI often has the feel (or the actual experience) of an external organization coming in to "do" CMMI *to* the organization, or as often, that staff members must pause their revenue-oriented work to complete process-oriented activities.

Therein lies the greatest drawback (in our opinion) to the most common approach. Instead of process improvement being an integral and transparent characteristic of everyday work, it becomes a non-productive layer of overhead activity superimposed on top of "real" work. And yet, this seems to be the prevalent way of implementing CMMI! Crazy, huh?

Why is it so prevalent?

That's where the two reasons for poor implementation, above, come in. People who don't understand the model, as well as people who are not process experts (and therefore may have a weak understanding of the model), don't truly "get" that the model is not prescriptive, and so they attempt to make it a prescription. Auditing and appraising to a prescription is far easier and less ambiguous than auditing and appraising to a robust integrated process infrastructure. Frankly, the "common" approach suits the lowest common denominator of companies and appraisers: those who aren't after true improvement, who are only after a level rating, and who are willing (companies, sometimes unknowingly) to sacrifice the morale and productivity of their projects for the short-term gain of what becomes a meaningless rating statement.

Alright already! So what's the reality-based approach about?!

The reality-based approach starts with a premise that a successful organization is already doing what it needs to be doing to be successful, and that process improvement activities can be designed into the organization's existing routines. Furthermore, the reality-based approach also assumes that, as a business, the organization actually *wants* to increase its operational performance. Note the use of "designed into". This is crucial. This means that for reality-based process improvement (reality-based CMMI implementation), the operational activities must be known, they must be definable, and they must be at work for the organization. Then, activities that achieve the goals of CMMI can be designed into those pre-existing activities.

This whole business of designing process improvement activities into product/project activities illuminates a simple but powerful fact: effective process improvement (CMMI included) requires processes to be engineered. Sadly, a recent Google search on "process engineering" turned up few instances where the search term was associated with software processes, and most of those positive hits were about software products, not process improvement. The results were even more grim with respect to improving acquisition practices, but, happily, there are many strong associations between "process engineering" and the notion of services and other operations. There is hope.